Fine-tuning Private GPT using Colossal-AI's Cloud Platform


Introduction

The emergence of ChatGPT showcased the potential of AI development. Large language models trained on general-purpose data are broadly capable, but they often fall short in specialized domains. Improving their performance in a specific field requires fine-tuning on high-quality, domain-specific data.

Enterprise customers who want to put AI to work have a strong demand for domain-specific large models. Such models can generate responses quickly, which improves productivity and cuts operational costs. Accuracy matters most to enterprise users, who need precise, professional output. To automate their workflows with AI, businesses must train highly accurate models tailored to specific industry challenges.

Yet the immense size of these models makes training and fine-tuning difficult. The process demands skilled engineering teams, substantial computing resources, and a significant amount of time. As a result, many enterprises are deterred from adopting large AI models.

In response, we built the Colossal-AI Platform. It is grounded in our research and engineering work on large-model training and is designed to make training large AI models efficient and cost-effective.

Visit Colossal-AI Platform: https://platform.colossalai.com/

Colossal-AI Platform

The Colossal-AI Platform is a cloud platform designed for accelerating deep learning training. It provides computing power together with model acceleration, significantly reducing the cost of training large deep learning models.

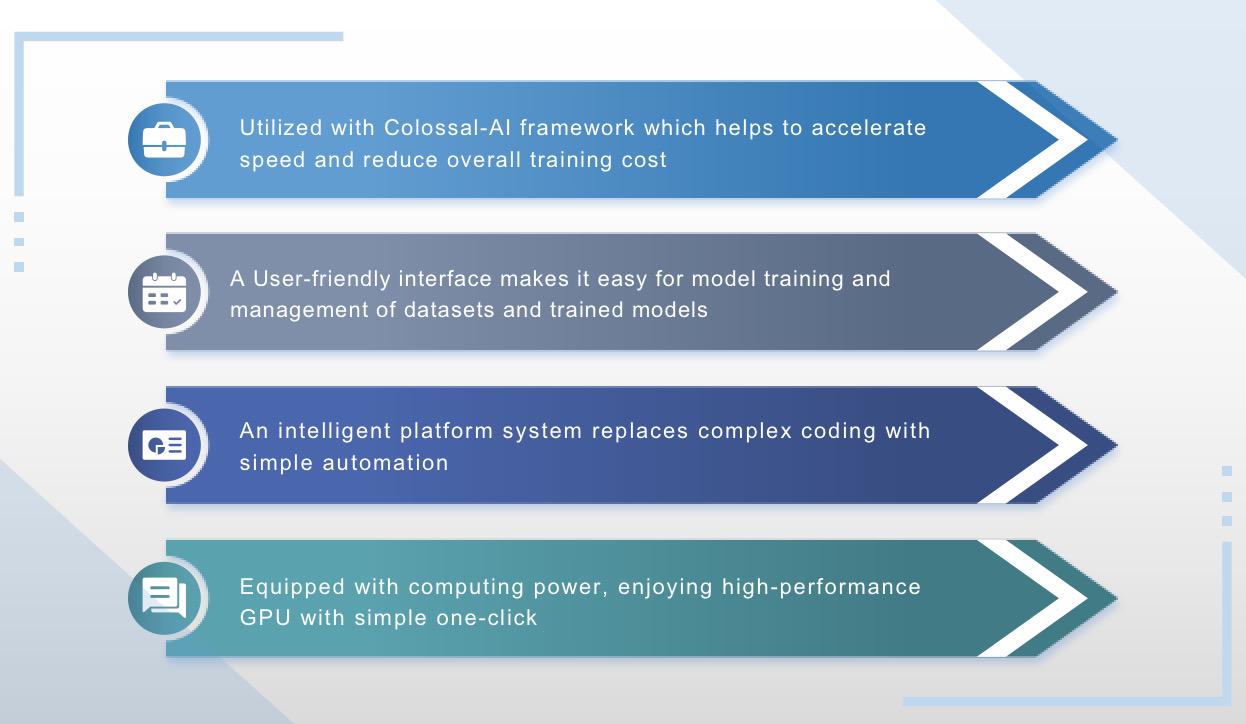

Colossal-AI Platform Advantages

In this tutorial, we walk through training or fine-tuning an industry-specific LLM on the Colossal-AI Platform, from initial environment setup to final deployment.

As an example, we fine-tune LLaMA-2 to answer medical questions. To train your own LLM, you only need to swap in a different dataset and training code. We also provide training templates that can save you further time.

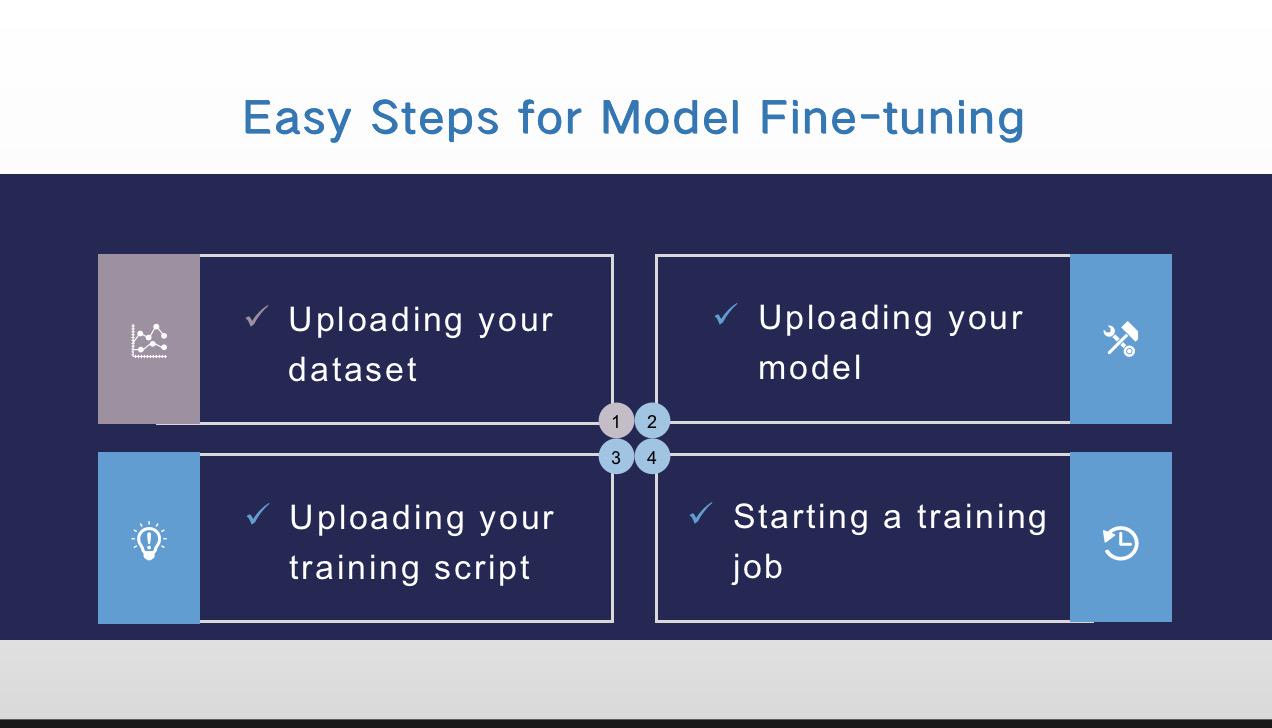

Easy Steps for Model Fine-tuning

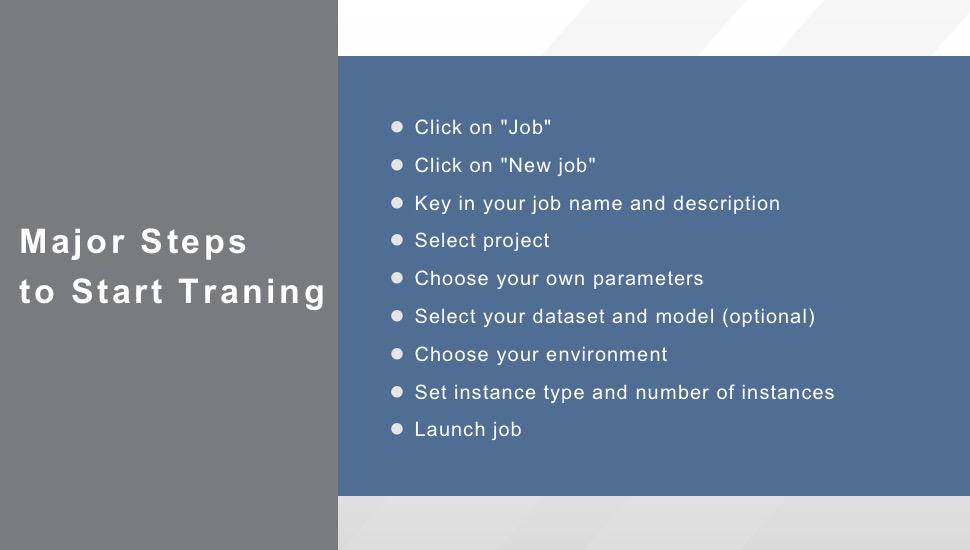

To complete the fine-tuning process, the main steps are shown below:

Steps for Fine-tuning

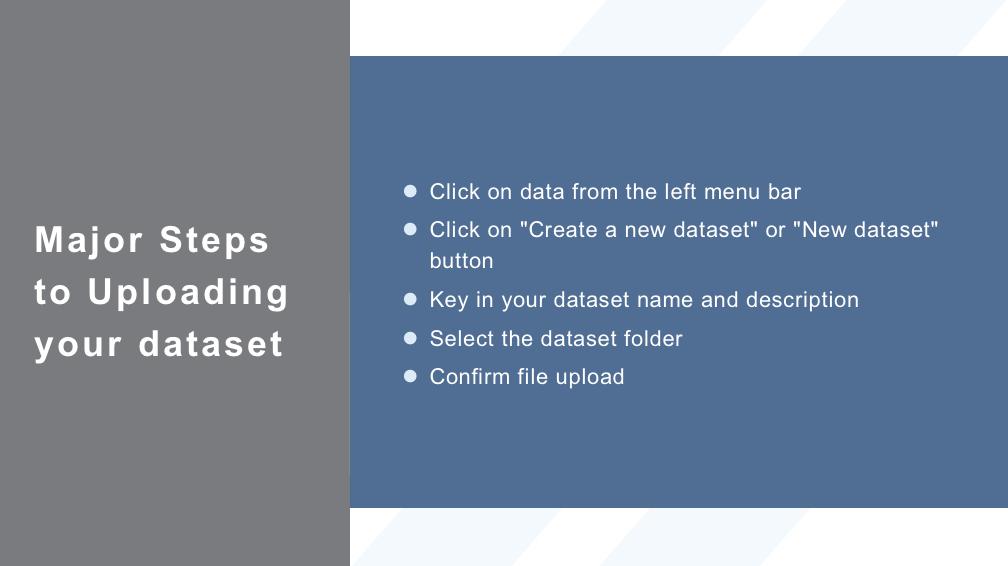

Uploading your dataset

Data has a major effect on model performance, so it should be selected carefully. Numerous high-quality datasets are available online, and you can also use your own dataset for training.

For example, we can download datasets from Hugging Face.

Here, we select shibing624/medical and download its English training data.

You can use

    wget https://huggingface.co/datasets/shibing624/medical/resolve/main/finetune/train_en_1.json

After downloading it, we should preprocess the data using the following script.

    python preprocess.py --dataset_path path/to/dataset --save_path path/to/save/dataset

import argparse

import json

def save(args):

data = []

with open(args.dataset_path, mode="r", encoding="utf-8") as f:

for line in f:

data.append(json.loads(line))

with open(args.save_path, mode="w", encoding="utf-8") as f:

json.dump(data, f, indent=4, default=str, ensure_ascii=False)

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument('--dataset_path', type=str, default=None)

parser.add_argument('--save_path', type=str, default=None)

args = parser.parse_args()

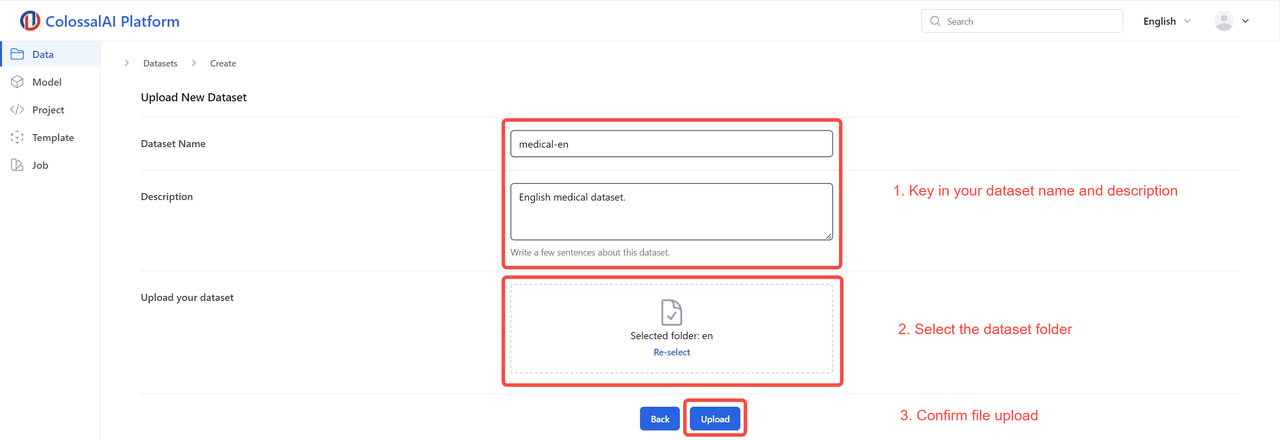

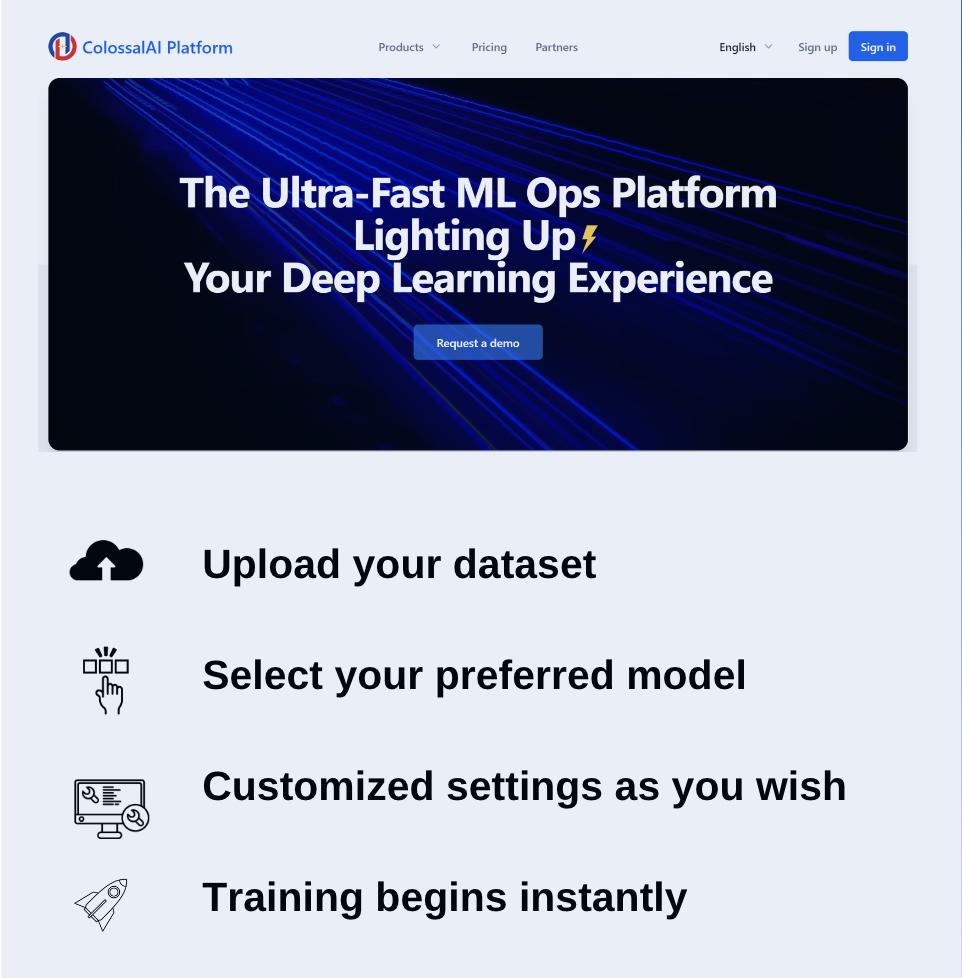

save(args)After preprocessing, you can upload your dataset to our platform. Follow the 5 steps shown in the figures:

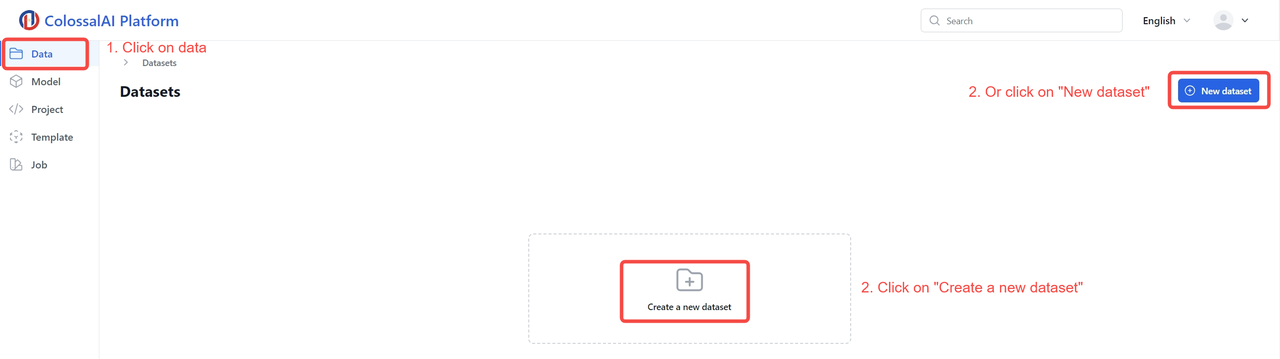

After the steps, you can view your uploaded dataset on the platform.

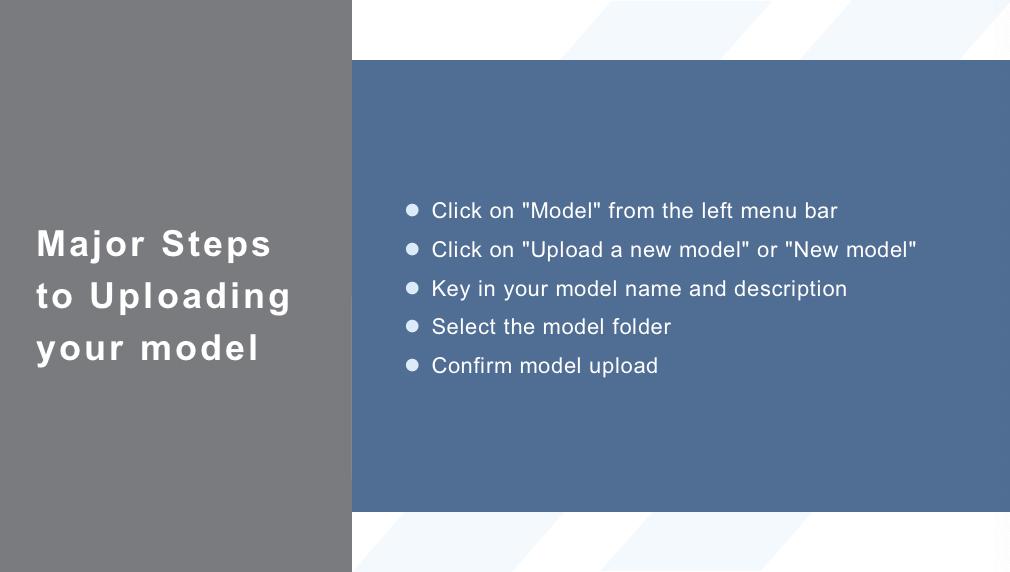

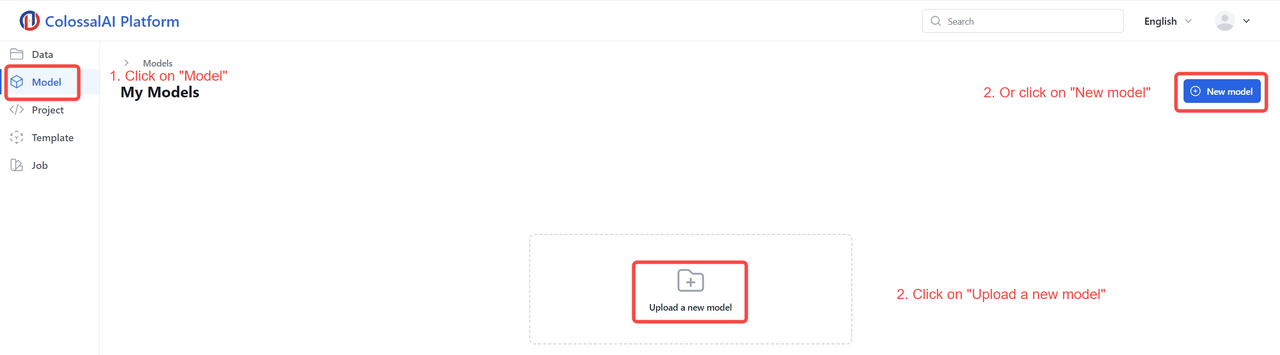

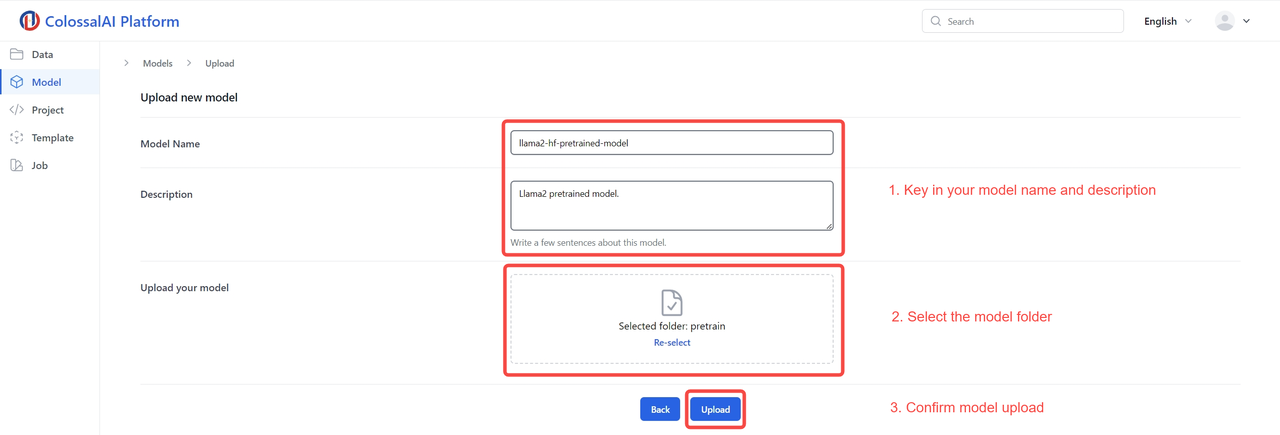
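Before uploading, a quick local check of the preprocessed file can catch formatting problems early. The following is a minimal sketch, not part of the platform: it assumes the preprocessed file is a JSON array of objects, and the key names `instruction` and `output` are assumptions about the dataset layout that you should adjust to your own data. The function name `validate_dataset` is hypothetical.

```python
import json
from pathlib import Path


def validate_dataset(path, required_keys=("instruction", "output")):
    """Check that `path` holds a JSON array of objects with the expected keys.

    The default key names are an assumption about the dataset layout;
    pass your own tuple of keys if your records look different.
    Returns the number of records when the file passes the check.
    """
    records = json.loads(Path(path).read_text(encoding="utf-8"))
    if not isinstance(records, list):
        raise ValueError("expected a top-level JSON array")
    for index, record in enumerate(records):
        if not isinstance(record, dict):
            raise ValueError(f"record {index} is not a JSON object")
        missing = [key for key in required_keys if key not in record]
        if missing:
            raise ValueError(f"record {index} is missing keys: {missing}")
    return len(records)
```

Calling `validate_dataset("path/to/save/dataset")` returns the record count, or raises `ValueError` naming the first record that does not match.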

Uploading your model

Next, you need to upload the model before training. For fine-tuning jobs (such as this example), you need a pre-trained model. Pre-trained models such as Bloom, GPT, and LLaMA are already stored on our platform, and you can use them directly without uploading anything. If you want to use other models, you can follow the steps below to upload custom models.

You will find your uploaded model under the folder.

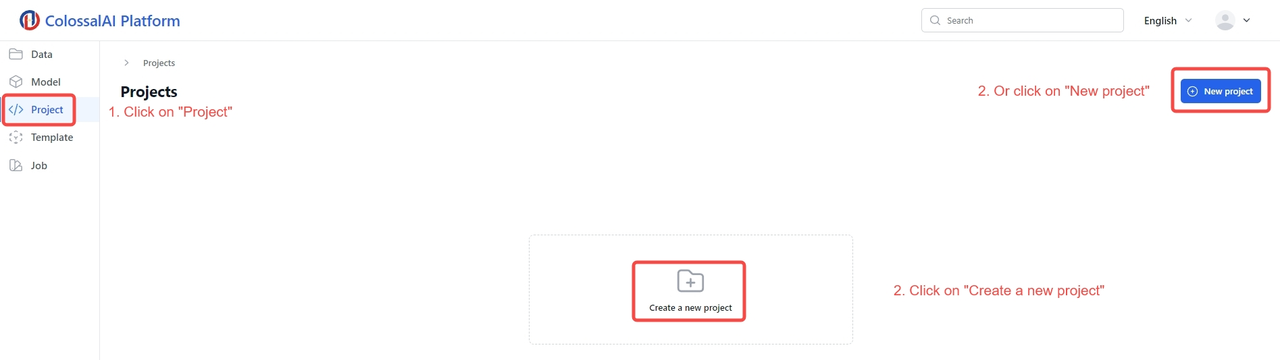

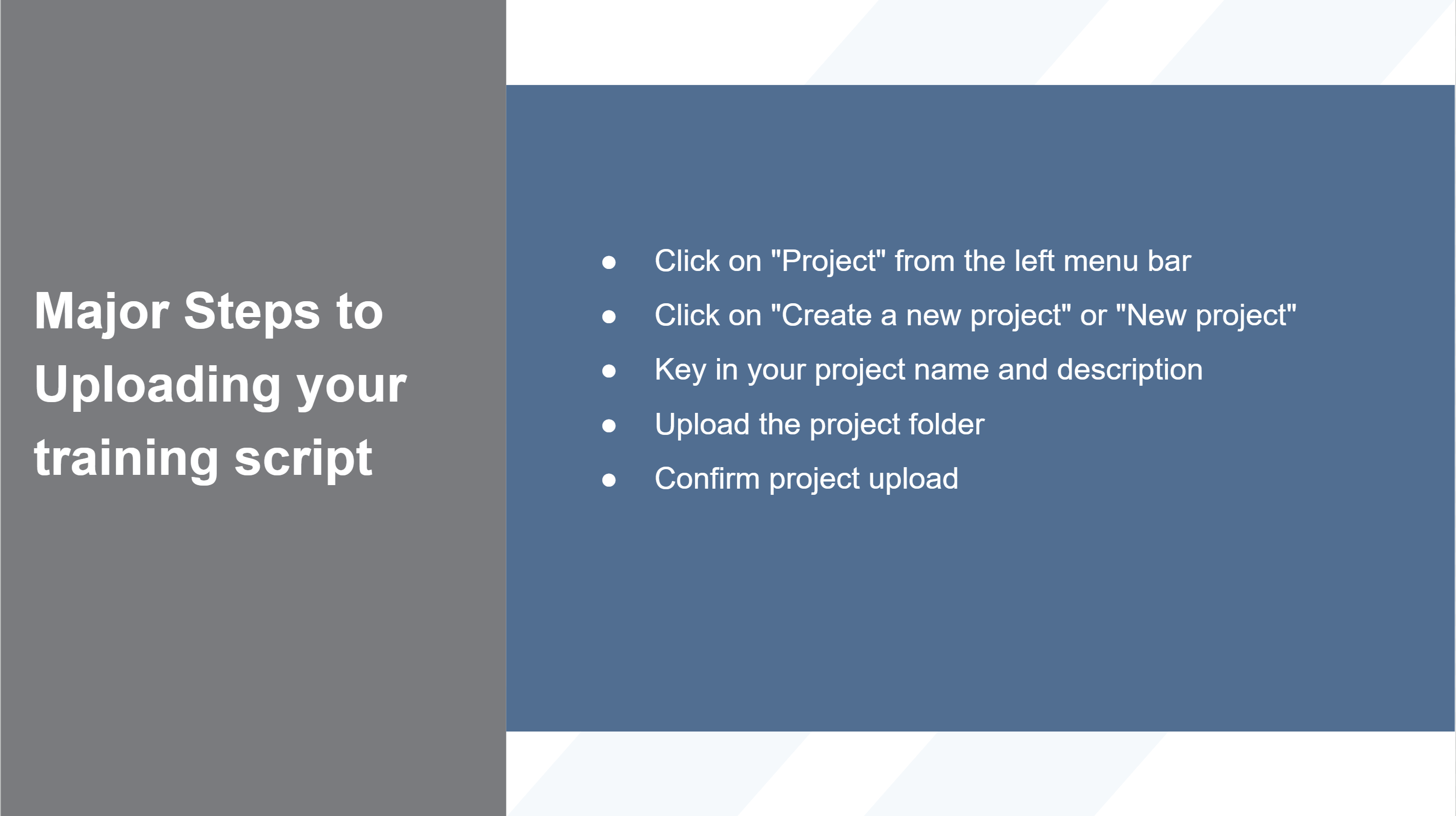

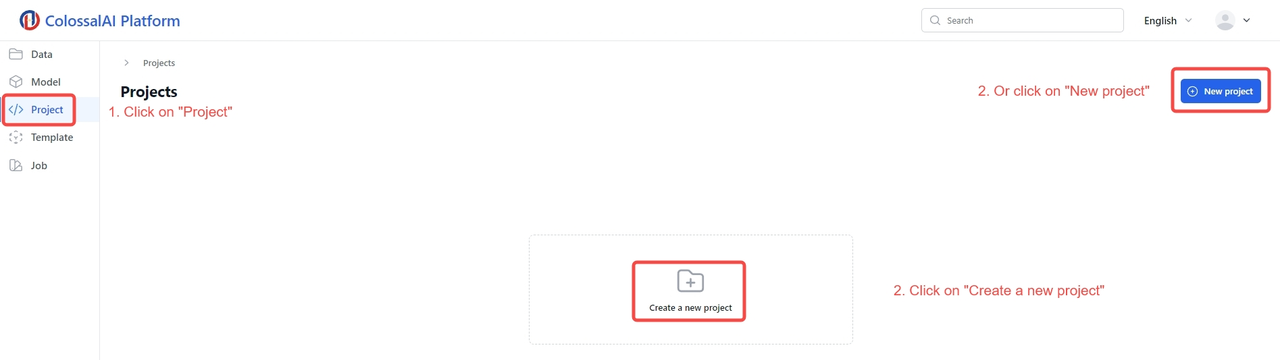

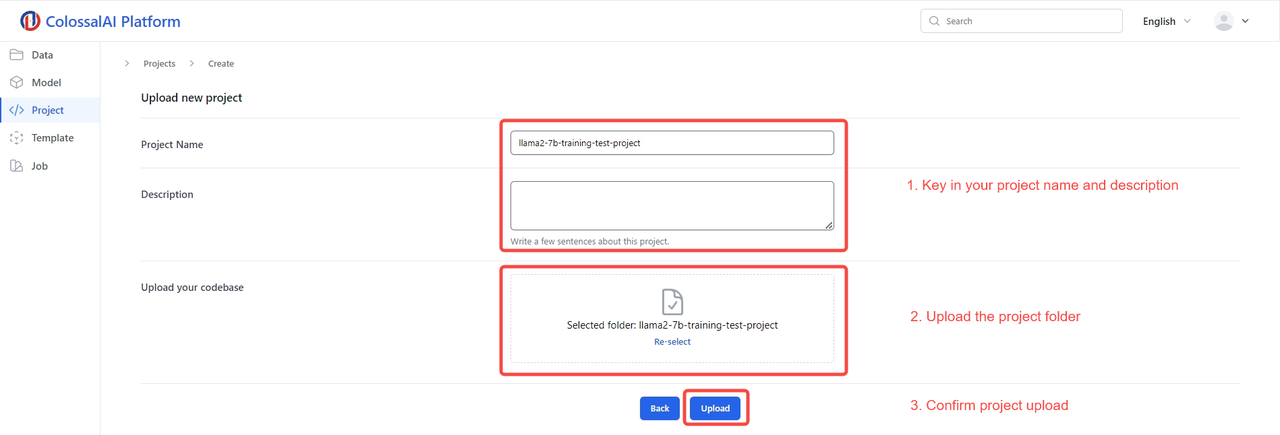

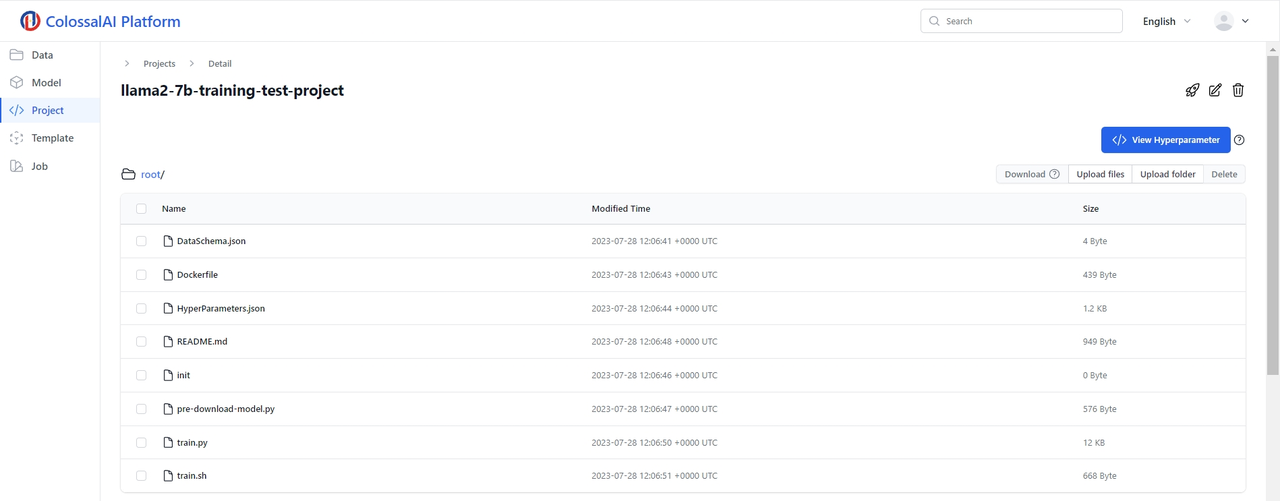

Uploading your training script

Once you have uploaded your model, the next step is to upload the training code. We provide a training template that needs only minor modifications to suit your needs.

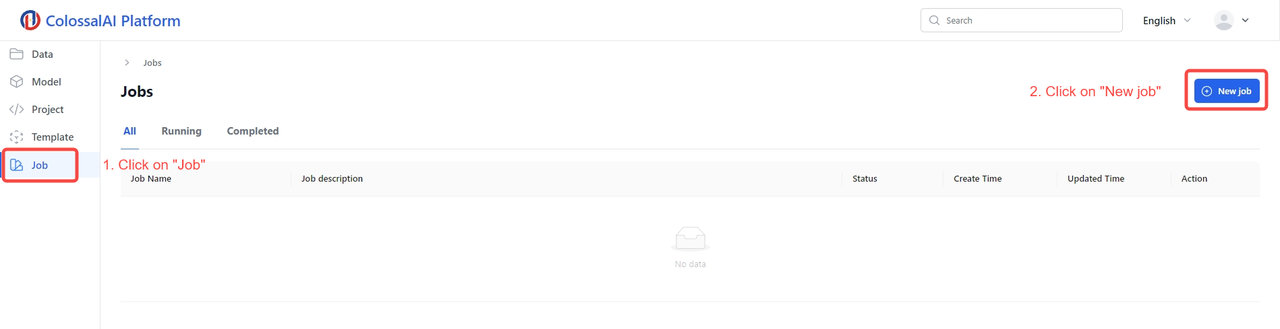

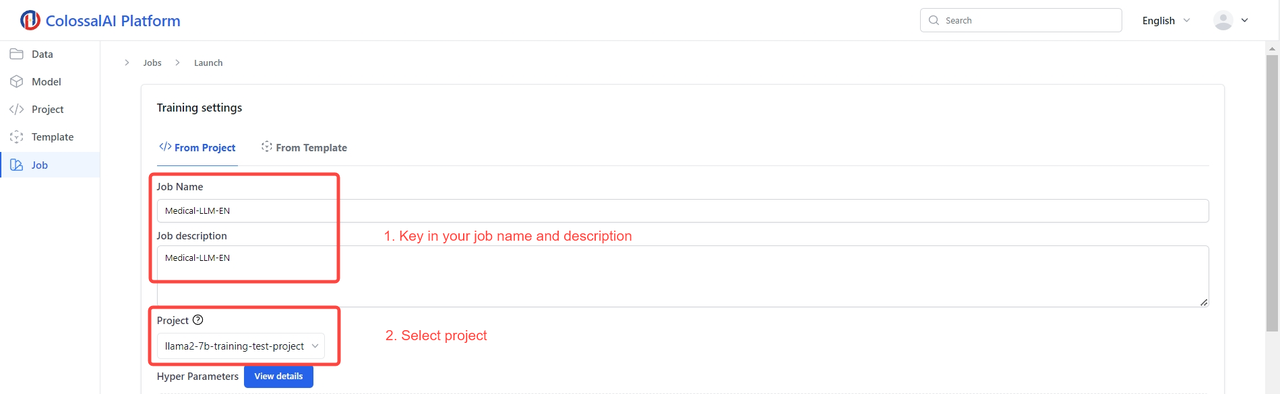

Starting a training job

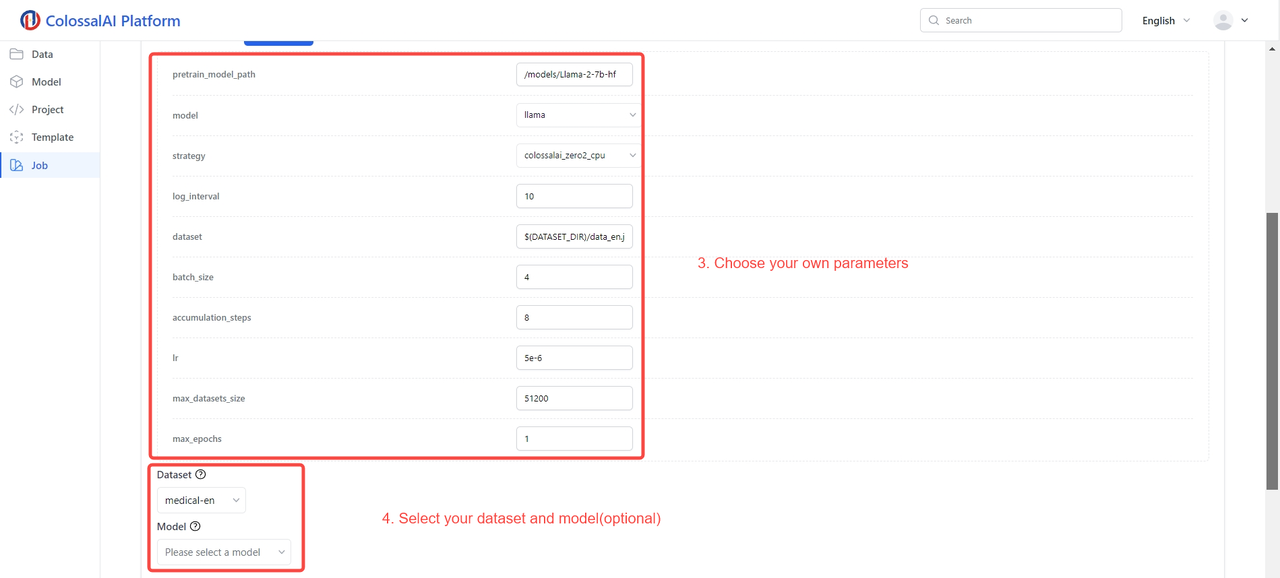

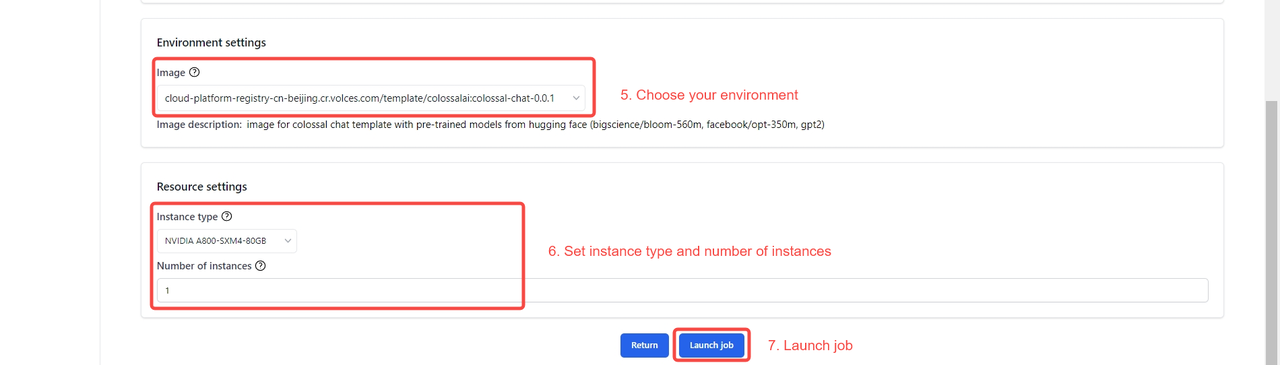

After the above preparation, you can start training the model. Start the job and fill in your hyperparameters, following the steps shown in the figures:

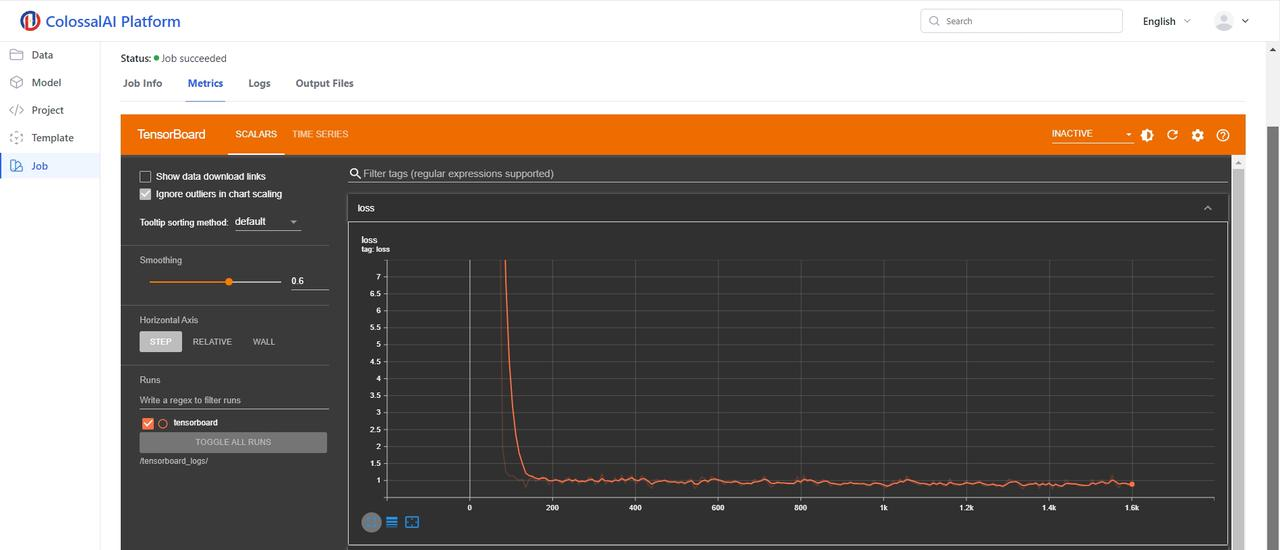

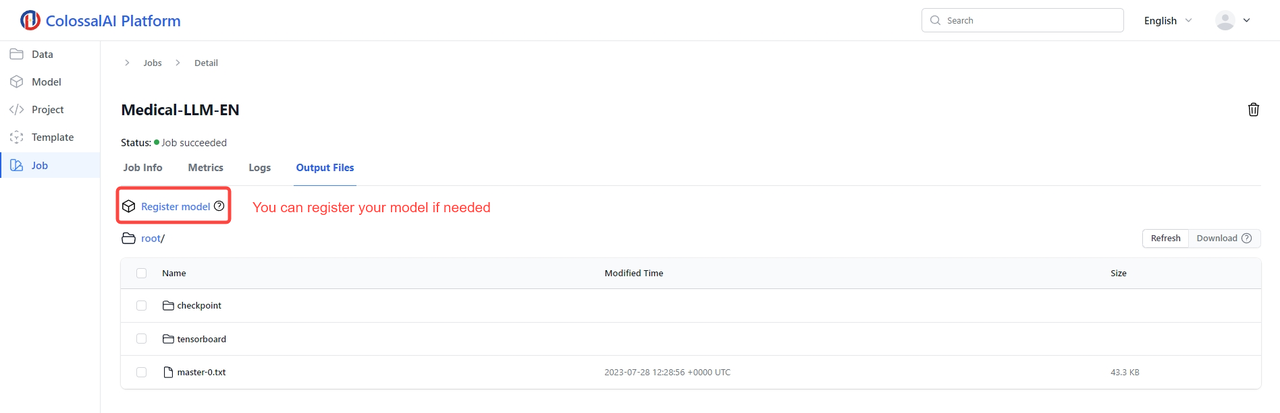

Then you can see the loss and the saved checkpoint.

You can register your model if needed and then load it on our platform for inference.
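As an illustration of how a training script might receive the hyperparameters entered when starting a job, here is a minimal sketch. It assumes the values reach the script as command-line flags, which the platform's actual template may handle differently. Every flag name and default value below is a hypothetical placeholder, not the platform's API.

```python
import argparse


def parse_hyperparameters(argv=None):
    """Parse fine-tuning hyperparameters from command-line style arguments.

    All flag names and defaults are hypothetical placeholders chosen for
    illustration; match them to whatever your training script expects.
    """
    parser = argparse.ArgumentParser(
        description="Fine-tuning hyperparameters (illustrative)"
    )
    parser.add_argument("--learning_rate", type=float, default=2e-5)
    parser.add_argument("--num_epochs", type=int, default=3)
    parser.add_argument("--batch_size", type=int, default=8)
    parser.add_argument("--max_length", type=int, default=512)
    # Passing argv=None makes argparse read from sys.argv, as in a real job.
    return parser.parse_args(argv)
```

For example, `parse_hyperparameters(["--learning_rate", "1e-4"])` overrides only the learning rate and leaves the other values at their defaults.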

Inference

Our platform offers a range of parallel acceleration methods and other optimizations tailored for inference. These optimizations significantly increase the inference speed of your trained model while reducing its memory usage. As a result, when you deploy and use the model locally, your hardware requirements are substantially lower.

Following the same steps as above, you can upload your inference project and start a job for it.

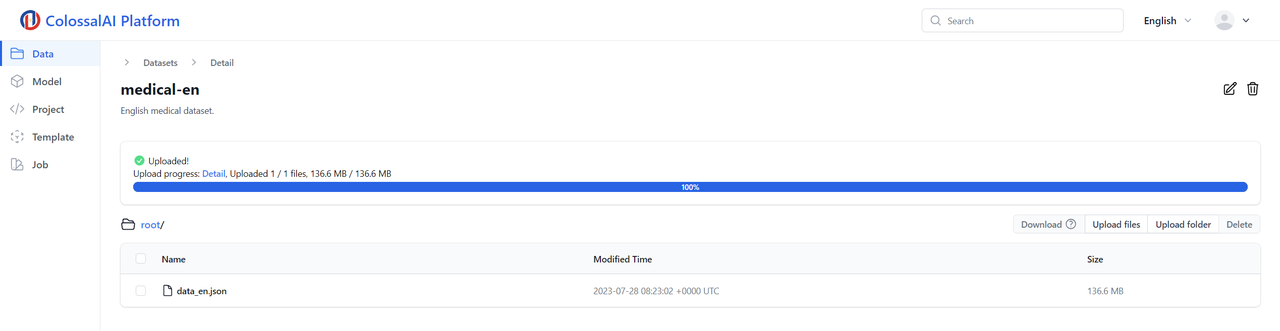

Here are the inference results:

Instruction:

Hi doctor, I have been experiencing joint pains and stiffness, especially in my knees.

Response:

Your symptoms could indicate inflammation in the joints or arthritis of the knee. To start with, it is important to diagnose the cause for the treatment depends on the exact cause. For further evaluation, a complete physical examination and some investigations including blood, x-rays, and imaging of the joints can be helpful to determine the cause. Sometimes, fluid obtained from the affected joint can be sent for laboratory tests to determine the exact cause, however, the tests can be invasive because a needle is inserted in the affected joint. Further diagnostic tests like synovial fluid culture can also be done to confirm suspected diagnosis.

After fine-tuning, the model can address medical questions and offer useful advice to patients. In this example, a patient presents with knee pain. The model quickly suggests potential causes of the joint pain and stiffness and recommends diagnostic tests to pinpoint the underlying issue. It also notes the risks of certain tests, for example that analyzing fluid drawn from the affected joint can help determine the exact cause but is invasive because a needle is inserted into the joint.

In sum, the fine-tuned model handles medical queries well in this example, delivering a timely and relevant response. This result illustrates the potential of AI to support and enhance medical consultation.
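The Instruction/Response layout shown above can be reproduced with a small prompt-formatting helper when you prepare inputs for your own inference project. This is only a sketch: the exact prompt template used by the platform's training and inference code is not stated here, so the layout below simply mirrors the printed example, and the function name `format_prompt` is hypothetical.

```python
def format_prompt(instruction, response=None):
    """Lay out an instruction (and optional response) in Instruction/Response form.

    This mirrors the layout of the example above; it is an assumption,
    not the platform's confirmed template.
    """
    prompt = f"Instruction:\n{instruction.strip()}\n\nResponse:\n"
    # For training data, append the reference answer; for inference, leave
    # the response empty so the model completes it.
    if response is not None:
        prompt += response.strip()
    return prompt
```

Calling `format_prompt` with only an instruction produces a prompt that ends at the `Response:` marker, ready for the model to complete.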

Conclusion

In conclusion, the Colossal-AI Platform makes large AI model training, once a complex endeavor, remarkably simple. There is no need for cumbersome environment configuration or for delving into the intricacies of acceleration methods; you can get that acceleration with just a few clicks. Within just 3 days, you can have your own fine-tuned large model, and all your data remains private, protected by robust encryption.

The Colossal-AI Platform stands as a true game-changer, offering a streamlined solution that democratizes AI model training and deployment, even for users without extensive computing resources. We are committed to minimizing training costs while maximizing training efficiency, all while prioritizing the security and privacy of user information.

We invite both enterprises and individuals to explore our platform. It's time to unlock the full potential of AI without the barriers of complexity and resource limitations. Experience the future with the Colossal-AI Platform.
