Finetune OpenSora

OpenSora is a state-of-the-art, open-source text-to-video model developed by HPC-AI Tech. It generates high-quality video, can be customized for specific applications, and benefits from open-source community contributions, which makes it a strong choice for building custom text-to-video applications. With one-click deployment and finetuning on HPC-AI.com, users can easily adapt OpenSora into a tailored video generation model.

This guide walks you through finetuning OpenSora on HPC-AI.com.

Create an Instance with the OpenSora Image

To get started with OpenSora on HPC-AI.com, follow the instructions here to create a new instance with the OpenSora image. When selecting an image, navigate to the Advanced Images section and choose OpenSora (1.2), then continue with the remaining steps to create the instance.

Finetune OpenSora Training Configurations

Once your instance is set up, you can begin finetuning the OpenSora model. The OpenSora image includes detailed configuration options for various training and inference tasks.

To access the configuration files, follow these steps:

- Connect to your instance either by clicking the JupyterLab button or by copying the SSH command and connecting from a terminal.

- Navigate to the ~/Open-Sora/configs directory to find the configuration files.

This guide will use the configuration file located at opensora-v1-2/train/stage3.py within the ~/Open-Sora/configs directory to finetune a customized OpenSora model.

1. Dataset Configuration

dataset = dict(
    type="VariableVideoTextDataset",
    transform_name="resize_crop",
)

Explanation:

- Type: Specifies the type of training dataset. VariableVideoTextDataset is a video-text dataset whose videos can vary in resolution, aspect ratio, and number of frames, and whose captions can vary in length.

- Transformations: transform_name="resize_crop" specifies the transformation applied during data loading, which resizes and crops video frames to a standard size.

Note: This guide uses preprocessed datasets available within the OpenSora image. To create a customized training dataset, please refer to the Data Processing Guide.
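To make the resize_crop step concrete, here is a minimal sketch of the geometry of a resize-then-center-crop, assuming the frame is scaled just enough to cover the target size and the overflow is cropped equally from both sides. The helper resize_crop_params is hypothetical and only computes sizes and offsets; OpenSora's own transform may differ in rounding and crop placement.

```python
def resize_crop_params(src_h, src_w, dst_h, dst_w):
    """Hypothetical helper: compute a resize-then-center-crop that maps a
    src_h x src_w frame onto dst_h x dst_w without distorting it.

    Returns (resized_h, resized_w, crop_top, crop_left).
    """
    # Scale by the larger ratio so the resized frame covers the target on both axes.
    scale = max(dst_h / src_h, dst_w / src_w)
    new_h = max(dst_h, round(src_h * scale))
    new_w = max(dst_w, round(src_w * scale))
    # Center the crop window; the overflow on the longer axis is discarded.
    top = (new_h - dst_h) // 2
    left = (new_w - dst_w) // 2
    return new_h, new_w, top, left


# A 1080x1920 landscape frame cropped to a 480x480 square.
print(resize_crop_params(1080, 1920, 480, 480))  # (480, 853, 0, 186)
```

Scaling by the larger ratio avoids stretching the frame, at the cost of discarding the edges of the longer axis.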

2. Bucket Configuration

This section configures the buckets that group training videos by resolution and number of frames, so that each batch contains videos of the same shape.

bucket_config = {
    "144p": {1: (1.0, 475), 51: (1.0, 51), 102: (1.0, 27), 204: (1.0, 13), 408: (1.0, 6)},
    "256": {1: (1.0, 297), 51: (0.5, 20), 102: (0.5, 10), 204: (0.5, 5), 408: ((0.5, 0.5), 2)},
    "240p": {1: (1.0, 297), 51: (0.5, 20), 102: (0.5, 10), 204: (0.5, 5), 408: ((0.5, 0.4), 2)},
    "360p": {1: (1.0, 141), 51: (0.5, 8), 102: (0.5, 4), 204: (0.5, 2), 408: ((0.5, 0.3), 1)},
    "512": {1: (1.0, 141), 51: (0.5, 8), 102: (0.5, 4), 204: (0.5, 2), 408: ((0.5, 0.2), 1)},
    "480p": {1: (1.0, 89), 51: (0.5, 5), 102: (0.5, 3), 204: ((0.5, 0.5), 1), 408: (0.0, None)},
    "720p": {1: (0.3, 36), 51: (0.2, 2), 102: (0.1, 1), 204: (0.0, None)},
    "1024": {1: (0.3, 36), 51: (0.1, 2), 102: (0.1, 1), 204: (0.0, None)},
    "1080p": {1: (0.1, 5)},
    "2048": {1: (0.05, 5)},
}

Explanation:

- Definition: This configuration defines how videos of different resolutions and lengths are batched. Each resolution maps to a dictionary whose keys are numbers of frames and whose values are tuples of (retention rate, maximum batch size).

- Parameter Explanation:

  - Retention Rate: Controls the probability of a video being assigned to a specific bucket. A retention rate of 0.0, as in (0.0, None), means no videos are assigned to that bucket, so it needs no batch size.

  - Maximum Batch Size: Determines the batch size for each bucket. For example, for a 102-frame video at 480p resolution, the maximum batch size is 3.

Recommendation: Choose a bucket configuration that matches your dataset and hardware. For example, if your dataset contains many low-resolution videos, consider increasing the retention rate and maximum batch size of the low-resolution buckets.
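The sketch below shows how to read the bucket table. It assumes, as a simplification, that a video is matched to the largest frame-count key it can fill within its resolution; OpenSora's real bucketing also applies the retention rates randomly and accounts for frame intervals, which the hypothetical bucket_entry and enabled_buckets helpers ignore.

```python
# Two rows copied from the bucket_config above; the helpers are hypothetical.
bucket_config = {
    "480p": {1: (1.0, 89), 51: (0.5, 5), 102: (0.5, 3), 204: ((0.5, 0.5), 1), 408: (0.0, None)},
    "720p": {1: (0.3, 36), 51: (0.2, 2), 102: (0.1, 1), 204: (0.0, None)},
}


def bucket_entry(config, resolution, num_frames):
    """Return the (retention rate, max batch size) tuple of the largest
    frame-count key that does not exceed num_frames, or None if none fits."""
    frame_buckets = config.get(resolution, {})
    fitting = [t for t in frame_buckets if t <= num_frames]
    return frame_buckets[max(fitting)] if fitting else None


def enabled_buckets(config):
    """List (resolution, frames, max batch size) for every bucket whose
    retention rate is not 0.0, i.e. buckets that can receive videos."""
    return [
        (res, frames, batch)
        for res, frame_buckets in config.items()
        for frames, (rate, batch) in frame_buckets.items()
        if rate != 0.0
    ]


print(bucket_entry(bucket_config, "480p", 120))  # (0.5, 3)
print(enabled_buckets(bucket_config))
```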

3. Gradient Checkpointing

grad_checkpoint = True

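Explanation: Gradient checkpointing keeps only a subset of activations during the forward pass and recomputes the rest during the backward pass, trading extra computation for lower memory usage. Enable it when GPU memory is limited.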
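A toy accounting of this trade-off, assuming block-level checkpointing where every activation tensor has the same size and only each block's input is kept: without checkpointing, all intermediate tensors of every block stay alive until the backward pass; with it, only the block inputs stay alive and one block at a time is recomputed. The 28 blocks and 10 tensors per block are made-up numbers for illustration.

```python
def activation_memory(num_blocks, tensors_per_block, checkpoint):
    """Toy model of block-level gradient checkpointing.

    Returns (peak tensors kept in memory, extra block forward passes).
    Every tensor is assumed to be the same size.
    """
    if not checkpoint:
        # All intermediate tensors of every block are kept for the backward pass.
        return num_blocks * tensors_per_block, 0
    # Only each block's input is kept; during backward, one block at a time is
    # re-run to rebuild its intermediate tensors, so each block runs forward twice.
    return num_blocks + tensors_per_block, num_blocks


# Hypothetical model: 28 blocks, 10 intermediate tensors per block.
print(activation_memory(28, 10, checkpoint=False))  # (280, 0)
print(activation_memory(28, 10, checkpoint=True))   # (38, 28)
```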

4. Acceleration Settings

num_workers = 4
num_bucket_build_workers = 16
dtype = "bf16"
plugin = "zero2"

Explanation:

- num_workers: The number of data-loading worker processes used by each training process. This value suits the four-GPU data-parallel setup used in this guide.

- num_bucket_build_workers: The number of workers used to build the bucket index. More workers speed up index construction but consume more resources.

- dtype: The numeric precision used for training. bf16 (bfloat16) reduces memory usage and computation time compared with fp32, and because it keeps the same exponent range as fp32, it is much less prone to overflow than fp16.

- plugin: The ColossalAI acceleration plugin. zero2 enables ZeRO stage 2, a distributed training technique that shards optimizer states and gradients across data-parallel GPUs to reduce per-GPU memory usage.
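The overflow behavior of bf16 can be checked with Python's struct module. This sketch assumes bfloat16 is the upper 16 bits of an IEEE float32 (sign, 8 exponent bits, 7 mantissa bits) and converts by truncation, whereas real hardware typically rounds to nearest; it compares against fp16 using struct's half-precision "e" format.

```python
import struct


def to_bf16(x: float) -> float:
    """Convert x to bfloat16 by truncating its float32 encoding to the top 16 bits."""
    bits32 = struct.pack(">f", x)  # sign | 8-bit exponent | 23-bit mantissa
    # Keep sign, exponent, and the top 7 mantissa bits; zero the rest.
    return struct.unpack(">f", bits32[:2] + b"\x00\x00")[0]


def representable_in_fp16(x: float) -> bool:
    """True if x fits in IEEE half precision (largest finite value 65504)."""
    try:
        struct.pack(">e", x)
        return True
    except OverflowError:
        return False


for value in (3.14159, 70000.0, 1e38):
    print(value, "bf16:", to_bf16(value), "fits fp16:", representable_in_fp16(value))
```

The trade-off is precision: bf16 keeps only 7 mantissa bits, so 70000.0 becomes 69632.0, but it does not overflow where fp16 would.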

5. Model Configuration

This section configures the models that OpenSora uses: the STDiT3 diffusion backbone, the VAE, the T5 text encoder, and the noise scheduler. The OpenSora image was originally built on the assumption that users would pre-download model weights into the commonData directory; with the settings below, the weights are instead downloaded directly from Hugging Face.

model = dict(
    type="STDiT3-XL/2",
    from_pretrained="hpcai-tech/OpenSora-STDiT-v3",
    qk_norm=True,
    enable_flash_attn=True,
    enable_layernorm_kernel=True,
    freeze_y_embedder=True,
)

vae = dict(
    type="OpenSoraVAE_V1_2",
    from_pretrained="hpcai-tech/OpenSora-VAE-v1.2",
    micro_frame_size=17,
    micro_batch_size=4,
)

text_encoder = dict(
    type="t5",
    from_pretrained="DeepFloyd/t5-v1_1-xxl",
    model_max_length=300,
    shardformer=True,
)

scheduler = dict(
    type="rflow",
    use_timestep_transform=True,
    sample_method="logit-normal",
)

Explanation: Each type loads the following pretrained weights from Hugging Face:

| Type | Model name |
|---|---|
| STDiT3-XL/2 | hpcai-tech/OpenSora-STDiT-v3 |
| OpenSoraVAE_V1_2 | hpcai-tech/OpenSora-VAE-v1.2 |
| t5 | DeepFloyd/t5-v1_1-xxl |
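The scheduler's sample_method="logit-normal" refers to drawing the training timestep t as sigmoid(u), with u drawn from a normal distribution, which places more samples at intermediate noise levels than uniform sampling does. The sketch below assumes mean 0 and standard deviation 1 and works on the normalized range (0, 1); it does not model use_timestep_transform or how OpenSora scales t to its internal timestep count.

```python
import math
import random


def sample_logit_normal(rng, mean=0.0, std=1.0):
    """Draw t = sigmoid(u) with u ~ Normal(mean, std); t is always in (0, 1)."""
    u = rng.gauss(mean, std)
    return 1.0 / (1.0 + math.exp(-u))


rng = random.Random(42)
samples = [sample_logit_normal(rng) for _ in range(10_000)]
# Uniform sampling would put about half the samples in (0.25, 0.75).
middle = sum(0.25 < t < 0.75 for t in samples) / len(samples)
print(f"mean t = {sum(samples) / len(samples):.3f}, share in (0.25, 0.75) = {middle:.2f}")
```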

6. Masking Settings

mask_ratios = {

"random": 0.01,

"intepolate": 0.002,

"quarter_random": 0.002,

"quarter_head": 0.002,

"quarter_tail": 0.002,

"quarter_head_tail": 0.002,

"image_random": 0.0,

"image_head": 0.22,

"image_tail": 0.005,

"image_head_tail": 0.005,

}

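Explanation: Each entry is the probability that a training sample uses that masking strategy. A mask selects frames that are kept clean and used as conditioning instead of being generated, which trains the model for tasks such as image-to-video (image_head), video continuation, and interpolation in addition to plain text-to-video. The ratios here sum to 0.25; the remaining samples are trained without a mask.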
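A minimal sketch of how such a table can drive per-sample mask selection, assuming the leftover probability (1 minus the sum of the ratios, here 0.75) goes to an unmasked "identity" case. The helpers with_identity and sample_mask_type are hypothetical; they pick a strategy name but do not build the frame masks themselves.

```python
import random

mask_ratios = {
    "random": 0.01,
    "intepolate": 0.002,  # key spelled as in the config above
    "quarter_random": 0.002,
    "quarter_head": 0.002,
    "quarter_tail": 0.002,
    "quarter_head_tail": 0.002,
    "image_random": 0.0,
    "image_head": 0.22,
    "image_tail": 0.005,
    "image_head_tail": 0.005,
}


def with_identity(ratios):
    """Return a copy of ratios with an 'identity' (no mask) entry holding the leftover probability."""
    remainder = 1.0 - sum(ratios.values())
    if remainder < -1e-9:
        raise ValueError("mask ratios sum to more than 1")
    return {**ratios, "identity": max(remainder, 0.0)}


def sample_mask_type(ratios, rng):
    """Pick one strategy name with probability proportional to its ratio."""
    names = list(ratios)
    return rng.choices(names, weights=[ratios[n] for n in names], k=1)[0]


probs = with_identity(mask_ratios)
rng = random.Random(0)
counts = {}
for _ in range(20_000):
    name = sample_mask_type(probs, rng)
    counts[name] = counts.get(name, 0) + 1
print(round(probs["identity"], 3), counts["identity"] / 20_000, counts.get("image_head", 0) / 20_000)
```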

7. Experiment Management

seed = 42
outputs = "outputs"
wandb = False
epochs = 1000
log_every = 10
ckpt_every = 200

- seed: Sets the random seed to ensure the reproducibility of the results.

- outputs: The output directory used to save log files and model checkpoints generated during training.

- wandb: Indicates whether to use WandB for experiment tracking.

- epochs: The total number of training epochs.

- log_every: Specifies how often (in terms of iterations) to print logs.

- ckpt_every: Specifies how often (in terms of iterations) to save model checkpoints.
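Because log_every and ckpt_every count iterations rather than epochs, the sketch below lists which steps trigger each event, assuming a global step counter that starts at 1 and does not reset between epochs. It also shows why a fixed seed makes results reproducible: the same seed yields the same shuffled order. Both helpers are hypothetical.

```python
import random


def event_steps(total_steps, log_every=10, ckpt_every=200):
    """Return the global steps (1-based) that print a log line and save a checkpoint."""
    steps = range(1, total_steps + 1)
    logs = [s for s in steps if s % log_every == 0]
    ckpts = [s for s in steps if s % ckpt_every == 0]
    return logs, ckpts


def seeded_order(items, seed=42):
    """Shuffle a copy of items with a dedicated RNG; the same seed gives the same order."""
    rng = random.Random(seed)
    order = list(items)
    rng.shuffle(order)
    return order


logs, ckpts = event_steps(450)
print(len(logs), ckpts)  # 45 [200, 400]
print(seeded_order(range(5)) == seeded_order(range(5)))  # True
```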

8. Optimization Settings

load = None
grad_clip = 1.0
lr = 1e-4
ema_decay = 0.99
adam_eps = 1e-15
warmup_steps = 1000

Explanation:

- load: Path to a saved checkpoint from which to resume training. If set to None, training starts fresh from the pretrained weights specified by from_pretrained in the model configuration.

- grad_clip: The gradient clipping threshold, which prevents gradient explosion.

- lr: The learning rate, which affects the convergence speed and final performance of the model.

- ema_decay: The decay rate of the exponential moving average (EMA) of the model weights. The EMA copy changes more smoothly than the raw weights.

- adam_eps: The epsilon added to the denominator of the Adam update to prevent division by zero.

- warmup_steps: The number of warmup steps for the learning rate, which helps prevent instability during the early stages of training.
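The sketch below illustrates three of these settings on plain Python lists: a linear learning-rate warmup, gradient clipping by global L2 norm, and an EMA update. It assumes the learning rate stays constant after warmup and that grad_clip is a global-norm threshold; these are common conventions, not a description of OpenSora's exact implementation.

```python
import math


def warmup_lr(step, base_lr=1e-4, warmup_steps=1000):
    """Linear warmup from 0 to base_lr over warmup_steps, then constant (assumed)."""
    return base_lr * min(1.0, step / warmup_steps)


def clip_by_global_norm(grads, max_norm=1.0):
    """Scale all gradients by one factor so their combined L2 norm is at most max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)
    scale = max_norm / total_norm
    return [g * scale for g in grads]


def ema_update(ema_weights, weights, decay=0.99):
    """Move each EMA weight a fraction (1 - decay) of the way toward the current weight."""
    return [decay * e + (1.0 - decay) * w for e, w in zip(ema_weights, weights)]


print(warmup_lr(0), warmup_lr(500), warmup_lr(2000))
print(clip_by_global_norm([3.0, 4.0]))  # norm 5.0 is rescaled to 1.0
print(ema_update([0.0], [1.0]))
```

Scaling all gradients by a single factor preserves their direction, which is why global-norm clipping is usually preferred over clipping each value independently.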

Training Start Command

Example using the open-source Disney dataset:

- Upload Disney-VideoGeneration-Dataset.zip to the home directory.

- Unzip the dataset.

- Upload modified_data.csv to the ~/Open-Sora directory.

Then run the following command from the ~/Open-Sora directory:

torchrun --standalone --nproc_per_node 4 scripts/train.py configs/opensora-v1-2/train/stage3.py --data-path modified_data.csv

Explanation:

- --standalone: Runs torchrun in single-node mode, where it sets up the rendezvous and related environment variables automatically.

- --nproc_per_node: The number of processes to launch on this node, typically one per GPU (four here).

- scripts/train.py: The training script provided by OpenSora.

- configs/opensora-v1-2/train/stage3.py: The path to the configuration file.

- --data-path: Specifies the path to the dataset.
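Before launching, it can help to sanity-check the file passed to --data-path. The sketch below assumes the CSV has path, text, num_frames, height, and width columns, which is our understanding of what VariableVideoTextDataset reads for bucketing; confirm the exact schema in the Data Processing Guide. The helper check_metadata is hypothetical.

```python
import csv

# Assumed schema; confirm against the Data Processing Guide.
REQUIRED_COLUMNS = ("path", "text", "num_frames", "height", "width")


def check_metadata(lines):
    """Return a list of problems in an OpenSora-style metadata CSV.

    `lines` is any iterable of CSV lines, such as an open file object.
    An empty list means no problems were found.
    """
    reader = csv.DictReader(lines)
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        return ["missing columns: " + ", ".join(missing)]
    problems = []
    for row_no, row in enumerate(reader, start=2):  # row 1 is the header
        if not (row["text"] or "").strip():
            problems.append(f"row {row_no}: empty caption")
        for col in ("num_frames", "height", "width"):
            try:
                ok = float(row[col]) > 0
            except (TypeError, ValueError):
                ok = False
            if not ok:
                problems.append(f"row {row_no}: bad {col} {row[col]!r}")
    return problems


sample = [
    "path,text,num_frames,height,width",
    "/data/a.mp4,a cat jumps,81,480,640",
    "/data/b.mp4,,0,480,640",
]
print(check_metadata(sample))
```

To check the real file, open modified_data.csv with open("modified_data.csv", newline="") and pass the file object to check_metadata.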

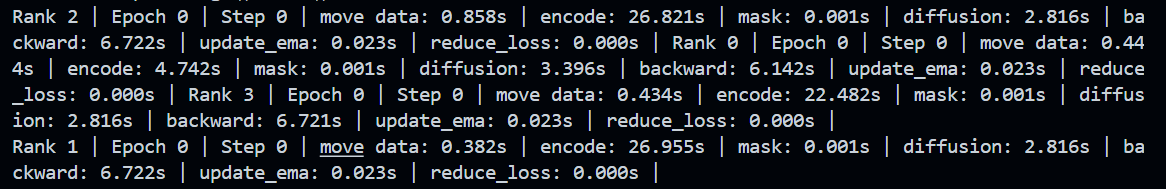

Output similar to the screenshot above indicates that the training process has started.